Research Symposium

24th annual Undergraduate Research Symposium, April 3, 2024

Kaya Simmons Poster Session 3: 1:30 pm - 2:30 pm /27

BIO

I am a second-year neuroscience major interested in non-human behavioral biology, ecology, and ornithology. I have been conducting research in the DuVal Lab since my first semester at FSU, and I plan to remain in this lab for the rest of my time as an undergraduate. I also conduct research in the Lemmon Lab, investigating the relationship between neuromodulators and social behavior in female chorus frogs. Additionally, I am interested in conservation and hope to use the skills I've learned from this project to apply machine learning to conservation efforts, such as detecting and tracking target species or identifying and monitoring important behaviors. Once I graduate, I plan to complete a PhD and pursue research full-time.

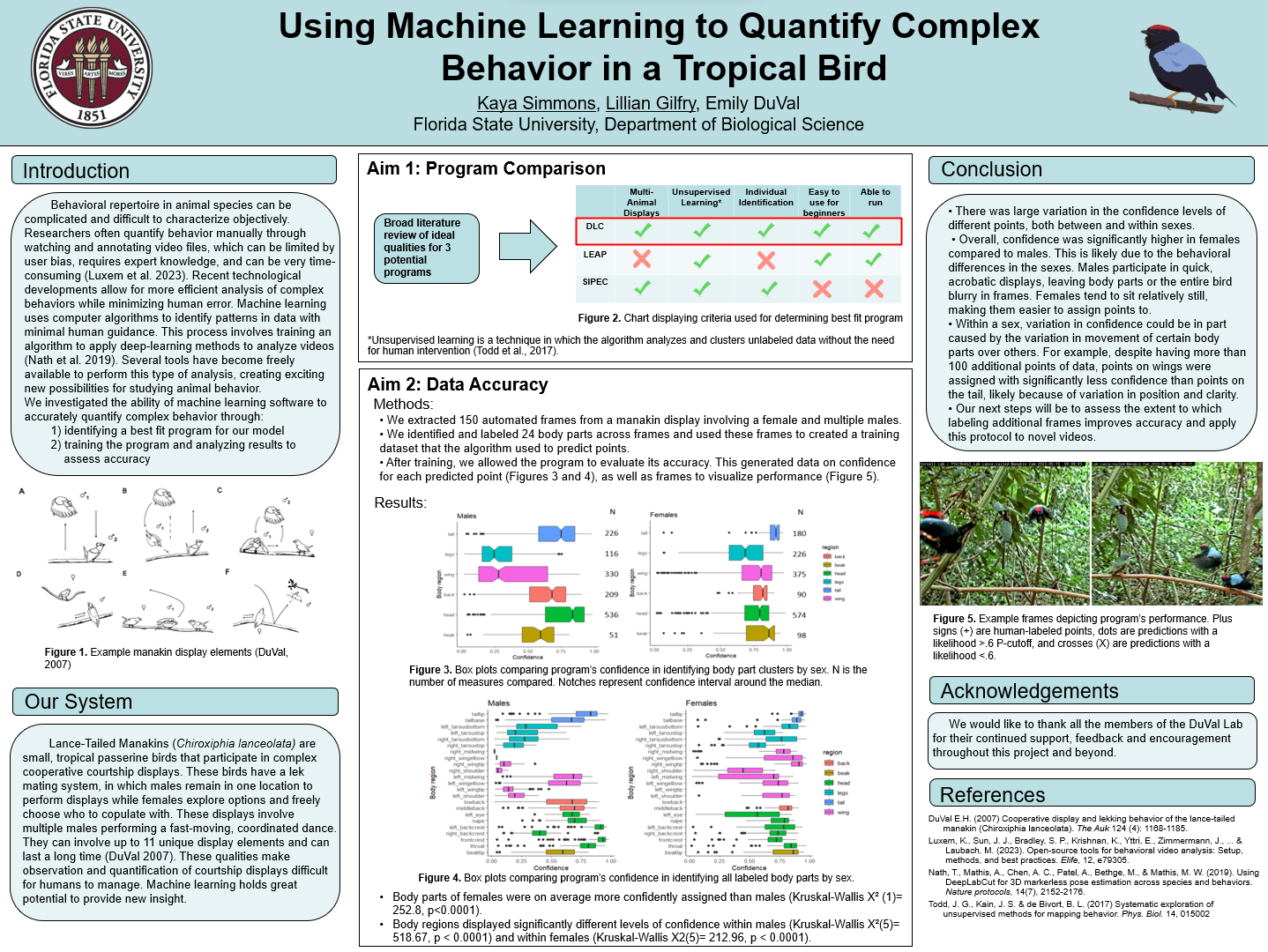

Using Machine Learning to Quantify Complex Behavior in a Tropical Bird

Authors: Kaya Simmons, Emily DuVal

Student Major: Behavioral Neuroscience

Mentor: Emily DuVal

Mentor's Department: Biological Sciences

Mentor's College: Arts and Sciences

Co-Presenters: Lillian Gilfry

Abstract

Complex interactions are a key part of animal behavior, but they are difficult to analyze. Lance-tailed Manakins (Chiroxiphia lanceolata) perform complicated multi-male courtship displays that are difficult and extremely time-consuming to analyze manually. The complexity of these interactions makes it challenging to understand variation among competing groups, and fine details in display patterns can be missed by the naked eye. Machine learning offers a potential solution, because its computational approaches can be used to analyze and quantify these behaviors. Here we assess the ability of machine learning software to accurately quantify complex behavior. We first conducted a broad literature review to identify the best-fit program for our model, then trained the program and analyzed the results to assess its accuracy. We chose DeepLabCut because of its capabilities for unsupervised, multi-animal identification and tracking. We trained the program through extensive frame-labeling of displays and evaluated its accuracy in predicting body parts. We found that confidence levels for identifying body parts varied greatly within and across sexes. Within males, confidence was lowest for points on the wings and legs and highest for points on the head. Confidence was higher overall for females, with the tail highest and the legs lowest. Following this initial test, we plan to return to labeling to generate more labeled data and increase the accuracy of the program, so that it can be used to better interpret this complex behavioral repertoire.
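The per-body-part comparison described above can be illustrated with a minimal sketch. It assumes the confidence levels correspond to the per-point likelihood scores that DeepLabCut reports alongside each predicted coordinate. The records, the `mean_confidence` and `rank_parts` helpers, and every number below are hypothetical and chosen only for illustration; they are not the study's data or the authors' analysis code.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical per-frame predictions: (sex, body part, likelihood in [0, 1]).
# These values are made up for illustration; they are not the study's results.
predictions = [
    ("male", "head", 0.9),
    ("male", "head", 0.7),
    ("male", "wing", 0.4),
    ("male", "wing", 0.2),
    ("female", "tail", 0.8),
    ("female", "leg", 0.5),
]


def mean_confidence(rows):
    """Average the likelihood for each (sex, body part) pair."""
    grouped = defaultdict(list)
    for sex, part, likelihood in rows:
        grouped[(sex, part)].append(likelihood)
    return {key: mean(values) for key, values in grouped.items()}


def rank_parts(summary, sex):
    """List body parts for one sex, from highest to lowest mean confidence."""
    parts = [(part, value) for (s, part), value in summary.items() if s == sex]
    ranked = sorted(parts, key=lambda item: item[1], reverse=True)
    return [part for part, _ in ranked]


summary = mean_confidence(predictions)
for (sex, part), value in sorted(summary.items()):
    print(f"{sex:6s} {part:5s} {value:.2f}")
```

Ranking body parts within each sex, for example with `rank_parts(summary, "male")`, is one simple way to see which points might benefit most from additional labeled frames.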

Keywords: Machine learning, ornithology, animal behavior