Research Symposium

25th annual Undergraduate Research Symposium, April 1, 2025

Andy Gonzalez

Poster Session 4: 3:00 pm - 4:00 pm / Poster #18

BIO

Hi! I'm Andy Gonzalez, and I'm currently studying Computer Science at Florida State University. I was born in Panama City, Panama, spent my early childhood in Tampa, and later returned to Panama City for my more formative years. My research interests broadly include machine learning, computer vision, gesture sensing, and particularly their applications to biological and health-related phenomena. After graduation, I plan to pursue a PhD in Computer Science to further refine my research skills and eventually lead a research lab of my own. In my free time, you can usually find me catching a movie at the Student Life Cinema, reading at Dirac Library, or hanging out at the CS Majors Lab.

Bringing Large Language Models to Wireless Sensing

Authors: Andy Gonzalez, Dr. Xin Liu

Student Major: Computer Science

Mentor: Dr. Xin Liu

Mentor's Department: Department of Computer Science

Mentor's College: College of Arts and Sciences

Co-Presenters:

Abstract

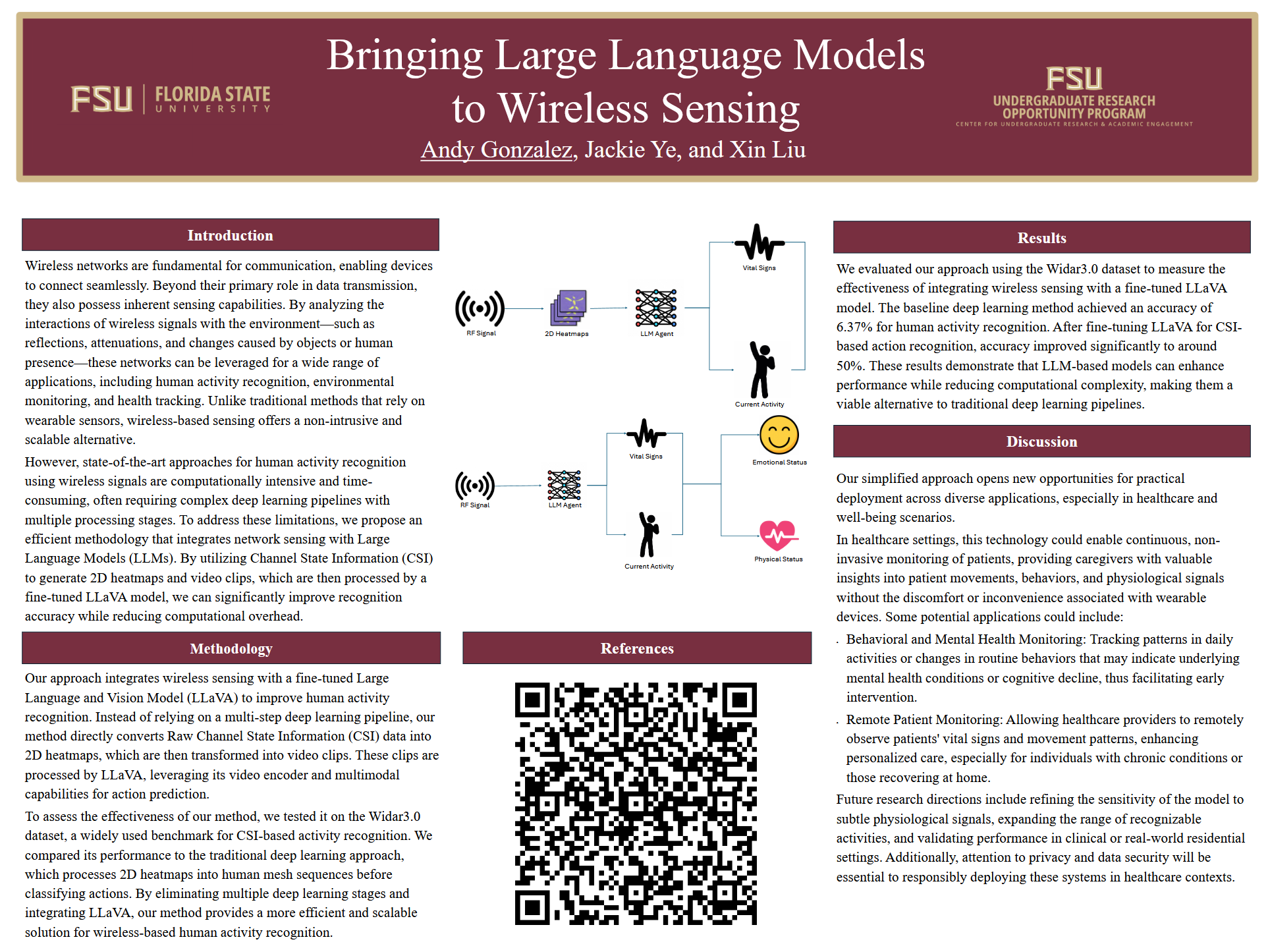

Wireless networks are fundamental for communication, enabling devices to connect seamlessly. Beyond communication, they also support sensing. By analyzing how wireless signals interact with the environment, such as reflections, attenuation, and changes caused by objects or human presence, these networks can be leveraged for applications like human activity recognition, environmental monitoring, and health tracking, all without requiring wearable devices. Traditional approaches rely on multiple complex algorithms, such as the MUSIC algorithm and deep neural networks, to transform raw Channel State Information (CSI) into 2D heatmaps, then into human mesh sequences, before predicting actions. This multi-stage architecture is computationally expensive. To address this challenge, we propose a novel framework that integrates large language models (LLMs) into wireless network-based human action recognition. Our method eliminates the need for complex intermediate steps by directly converting CSI data into short video clips of body-coordinate velocity profile (BVP) heatmaps. These clips are then processed using a Large Language and Vision Assistant (LLaVA) with a built-in video encoder. Through fine-tuning on the Widar3.0 dataset, our approach achieves a remarkable improvement in recognition accuracy, from approximately 6.37% to nearly 50%, while significantly reducing computational overhead. This streamlined design highlights the potential of combining network-sensed signals with vision-based language models, providing a fast, non-invasive solution for monitoring human activities in diverse environments. Our results underscore the promise of leveraging wireless signals and advanced language–vision architectures to develop robust and scalable sensing applications.
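The abstract does not say how the BVP heatmaps are rendered into video clips, so the following is only a minimal sketch of one plausible way to do that step. It assumes each sample is a NumPy array of shape (H, W, T), for example a 20 x 20 grid of velocity bins per time step, and turns it into a stack of grayscale frames that a video encoder could consume. The function name `bvp_to_frames` and the `scale` parameter are hypothetical and are not taken from the authors' implementation.

```python
import numpy as np


def bvp_to_frames(bvp: np.ndarray, scale: int = 8) -> np.ndarray:
    """Turn a BVP series of shape (H, W, T) into uint8 grayscale frames.

    Returns an array of shape (T, H * scale, W * scale).
    Assumption: the input layout is (H, W, T); this is illustrative only.
    """
    if bvp.ndim != 3:
        raise ValueError("expected an array of shape (H, W, T)")

    # Put time first so that each frames[t] is one heatmap.
    frames = np.moveaxis(bvp.astype(np.float64), -1, 0)

    # Normalize over the whole clip so brightness is comparable across frames.
    lo, hi = frames.min(), frames.max()
    if hi > lo:
        frames = (frames - lo) / (hi - lo)
    else:
        frames = np.zeros_like(frames)  # constant input: emit black frames

    frames = np.round(frames * 255).astype(np.uint8)

    # Nearest-neighbor upsampling so the small heatmap becomes a usable image.
    return frames.repeat(scale, axis=1).repeat(scale, axis=2)


# Synthetic stand-in for one BVP sample (not real Widar3.0 data).
rng = np.random.default_rng(0)
demo_bvp = rng.random((20, 20, 12))
clip = bvp_to_frames(demo_bvp)
print(clip.shape, clip.dtype)
```

Normalizing over the whole clip rather than per frame is a design choice in this sketch: it preserves relative intensity changes over time, which is presumably part of what distinguishes one gesture from another.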

Keywords: Wireless Sensing, Large Language Models (LLMs), Machine Learning, Artificial Intelligence