Research Symposium

24th annual Undergraduate Research Symposium, April 3, 2024

Lynn Pierre Etienne Poster Session 3: 1:30 pm - 2:30 pm /461

BIO

Lynn Pierre Etienne is a junior majoring in computer engineering, originally from Haiti. She has a deep passion for numbers, programming, and robotics, and for the joy of teaching them to kids. Her fascination with robotics began in high school, where she actively participated in the robotics team and competed in the VEX Robotics Competition. This experience ignited her dual interest in programming devices and constructing hardware. As she looks toward the future, a significant part of her dream involves giving back to her home country, Haiti, where access to advanced technology is severely limited. By introducing and implementing cutting-edge technologies there, she aims to contribute to its development and advancement. Her career is not just a personal quest for knowledge and success but also a pathway to support her family and make a meaningful impact in Haiti, bridging the gap where technology is lacking. Alongside her studies, you will find her on campus as a member of Delta Sigma Theta Sorority, Inc., the FAMU-FSU Society of Women Engineers, and the FAMU-FSU National Society of Black Engineers, and serving as the Volunteer Chair for Perfect Pair at FSU and as the Student Affairs Philanthropy Ambassador.

Object Recognition for Improved User Intent Inference for Robotic Lower-Limb Assistive Devices

Authors: Lynn Pierre Etienne, Taylor Higgins

Student Major: Computer Engineering

Mentor: Taylor Higgins

Mentor's Department: Department of Mechanical Engineering

Mentor's College: FAMU-FSU College of Engineering

Co-Presenters:

Abstract

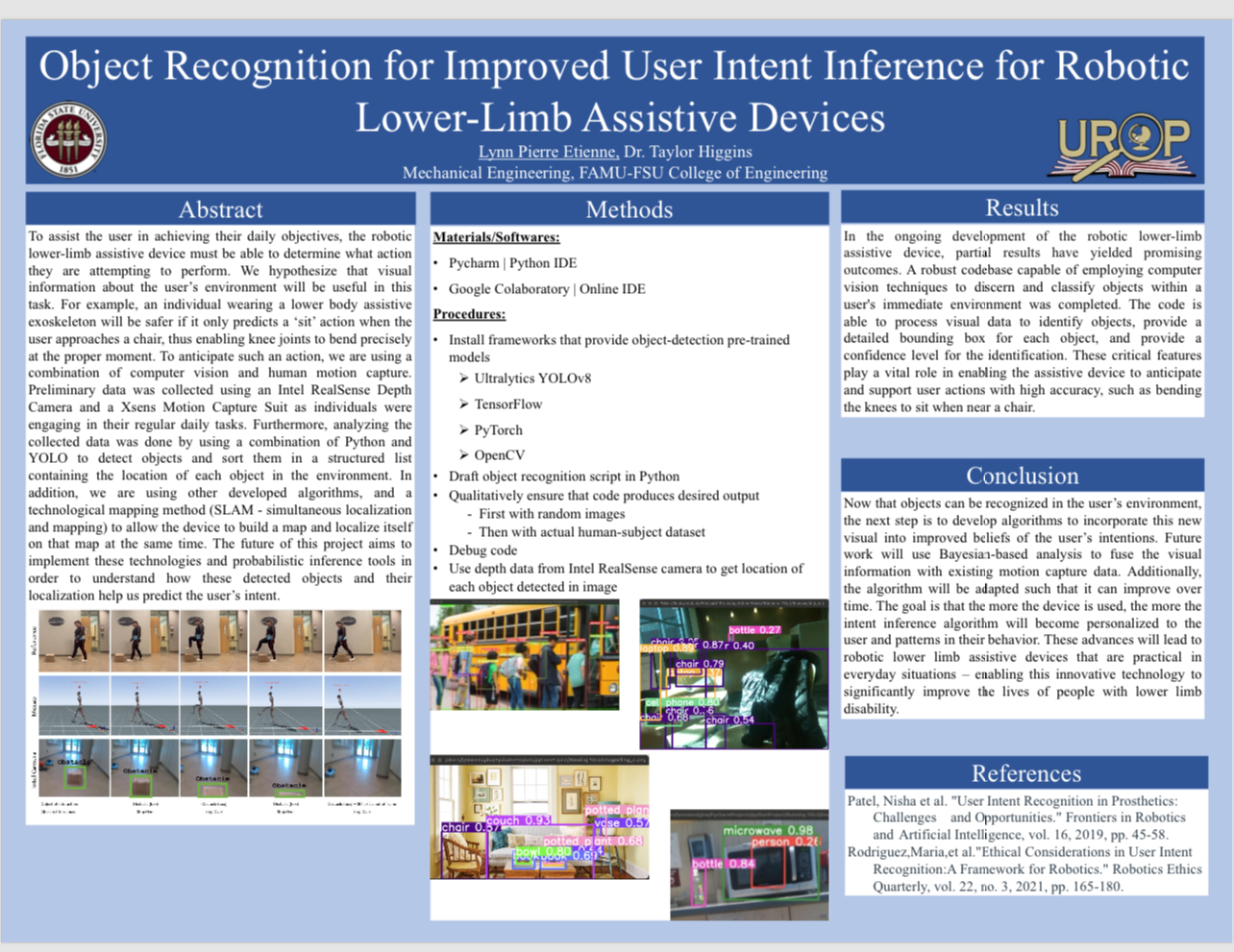

To assist users in achieving their daily objectives, a robotic lower-limb assistive device must be able to determine what action they are attempting to perform. We hypothesize that visual information about the user's environment will be useful in this task. For example, an individual wearing a lower-body assistive exoskeleton will be safer if the device predicts a 'sit' action only when the user approaches a chair, thus enabling the knee joints to bend at the proper moment. To anticipate such an action, we are using a combination of computer vision and human motion capture.

Preliminary data were collected using an Intel RealSense Depth Camera and an Xsens Motion Capture Suit while individuals engaged in their regular daily tasks. The collected data were analyzed using a combination of Python and YOLO to detect objects and sort them into a structured list containing the location of each object in the environment. In addition, we are using other algorithms and a mapping method, SLAM (simultaneous localization and mapping), which allows the device to build a map of its surroundings and localize itself on that map at the same time. Future work on this project aims to combine these technologies with probabilistic inference tools in order to understand how the detected objects and their locations help us predict the user's intent.
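As a rough illustration of the two ideas in the abstract, a structured list of detected objects with locations and a probabilistic update of user intent, the following minimal Python sketch may help. It is not the authors' pipeline: the `DetectedObject` fields, the `build_object_list` and `update_intent` helpers, the confidence threshold, the 1 m proximity threshold, and the likelihood boost are all hypothetical choices made for this example, and the detections are hand-written stand-ins for what a detector such as YOLO paired with a depth camera might report.

```python
from dataclasses import dataclass
import math


@dataclass
class DetectedObject:
    """One detection: class label, confidence, and position in metres
    relative to the user (hypothetical layout, assumed for this sketch)."""
    label: str
    confidence: float
    x: float
    y: float
    z: float

    @property
    def distance(self) -> float:
        # Straight-line distance from the user to the object.
        return math.sqrt(self.x ** 2 + self.y ** 2 + self.z ** 2)


def build_object_list(detections, min_confidence=0.5):
    """Drop low-confidence detections and sort the rest nearest-first."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    return sorted(kept, key=lambda d: d.distance)


def update_intent(prior, objects, near_m=1.0, boost=4.0):
    """Toy Bayes update: if a chair is within near_m metres, weight the
    'sit' intent by an assumed likelihood ratio, then renormalize."""
    chair_near = any(
        o.label == "chair" and o.distance <= near_m for o in objects
    )
    likelihood = {intent: 1.0 for intent in prior}
    if chair_near and "sit" in likelihood:
        likelihood["sit"] = boost
    unnormalized = {i: prior[i] * likelihood[i] for i in prior}
    total = sum(unnormalized.values())
    return {i: p / total for i, p in unnormalized.items()}


# Hand-written example detections (not real data).
detections = [
    DetectedObject("chair", 0.9, 0.0, 0.0, 0.8),
    DetectedObject("table", 0.8, 0.3, 0.0, 0.4),
    DetectedObject("cup", 0.2, 0.0, 0.0, 0.1),  # below the confidence cutoff
]
objects = build_object_list(detections)
prior = {"walk": 0.5, "sit": 0.25, "stand": 0.25}
posterior = update_intent(prior, objects)
```

In this sketch, the probability assigned to 'sit' rises only when a chair is both confidently detected and close to the user, which mirrors the chair example in the abstract. A real system would replace the fixed boost with likelihoods estimated from the motion capture and camera data.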

Keywords: robotics, computer vision, Python, YOLO, user inference, assistive devices, OpenCV