Research Symposium

24th annual Undergraduate Research Symposium, April 3, 2024

Valery Sastoque

Poster Session 5: 4:00 pm - 5:00 pm/228

BIO

My name is Valery, and I am a senior at Florida State University pursuing a bachelor's degree in Cell Molecular Neuroscience. I have been participating in research for the past two years, gaining hands-on expertise while discovering my deep passion for this field. I am currently involved in two research labs at FSU, the Martin Memory Lab and the RAD Lab, and I also volunteer at Big Bend Hospice. My research interests lie in the cognitive and clinical neuroscience of memory and psychopathology, and I plan to attend medical school.

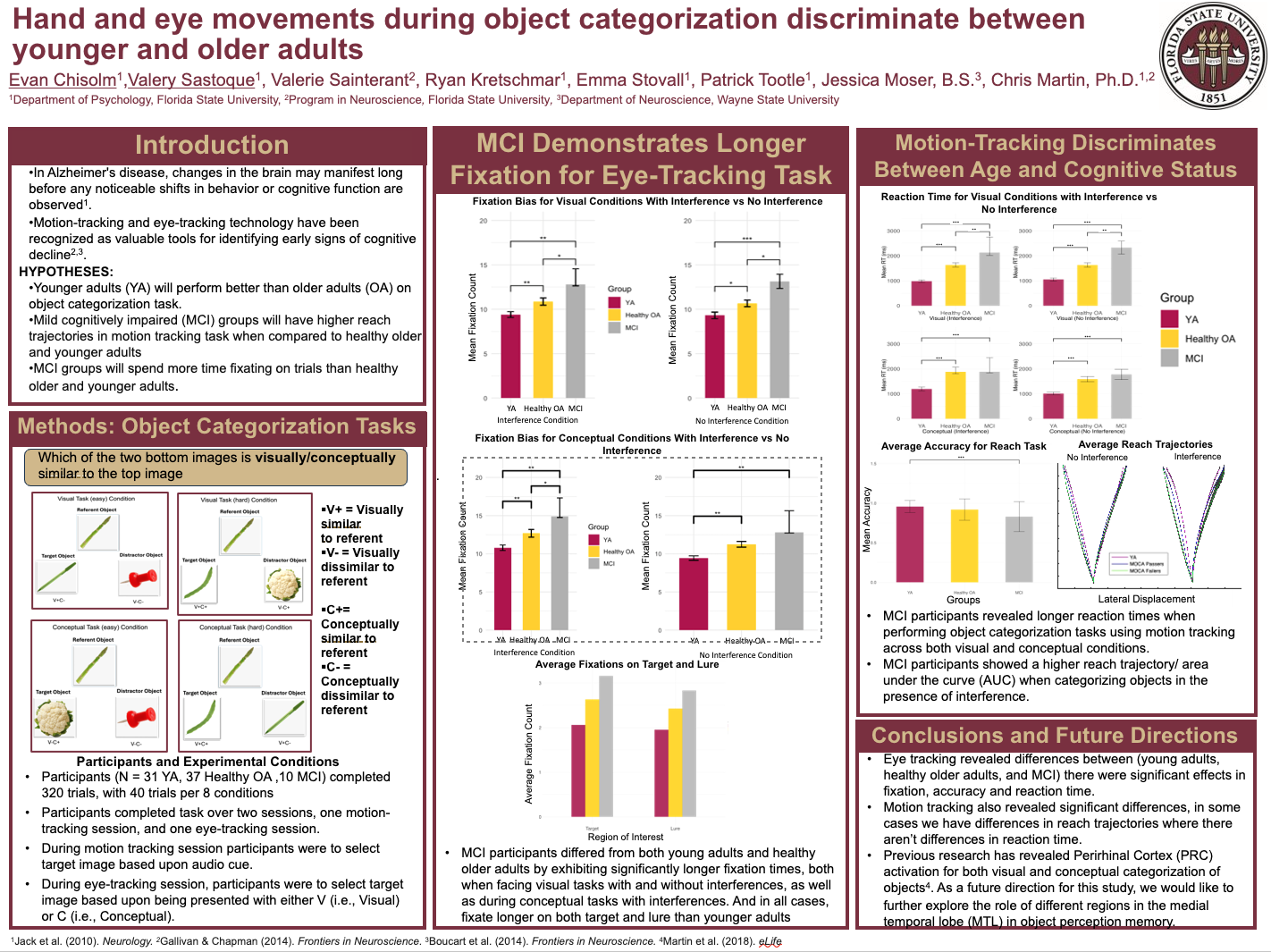

Hand and eye movements during object categorization discriminate between younger and older adults

Authors: Valery Sastoque, Chris Martin

Student Major: Cell Molecular Neuroscience

Mentor: Chris Martin

Mentor's Department: Department of Psychology

Mentor's College: College of Arts and Sciences

Co-Presenters: Jonathan Evan Chisolm

Abstract

The ability to flexibly categorize objects is an essential aspect of adaptive behavior. In complex environments with rapidly changing task demands, accurate categorization requires the resolution of feature-based interference. Recent neuroimaging and neuropsychological evidence suggests that the perirhinal cortex allows us to group objects based on either their semantic or their visual features when faced with cross-modal interference. We build on these findings by asking whether hand and eye movements made during categorization tasks with cross-modal interference discriminate between younger and older adults. We additionally examined whether these behavioral indices track overall cognitive status in older adults. Three objects were presented on each trial: a referent, a target, and a distractor. In the visual categorization task, targets were visually similar to the referent, whereas distractors were semantically similar to it. In the semantic categorization task, targets were semantically similar to the referent, whereas distractors were visually similar to it. Participants indicated their categorization decisions by touching the target in our motion-tracking experiment and by pressing a button in our eye-tracking experiment. We found that reach trajectory and gaze, which are continuous measures of decision making, reliably discriminated between younger and older adults. In both cases, older adults were influenced by the distractors to a greater degree than younger adults were. Most interestingly, reach and gaze were significant predictors of overall cognitive function in the older adult group. These findings suggest that hand and eye movements may reveal subtle age-related changes in cognitive functions supported by the perirhinal cortex.

Keywords: Memory, Alzheimer's, Motion tracking, Eye tracking