Research Symposium

24th annual Undergraduate Research Symposium, April 3, 2024

Hoang Vu

Poster Session 3: 1:30 pm - 2:30 pm/60

BIO

Welcome to my profile! I'm Hoang, a student from Vietnam majoring in Computer Science. I love learning about data structures and algorithms, powerful tools that help me categorize and tackle coding problems efficiently. Outside of school, like most people, I enjoy playing action games and watching thriller movies. Large language models have held my attention for a while, and thanks to UROP I have had the opportunity to meet and learn from wonderful mentors who are very knowledgeable in this area. I hope to leverage the skills and knowledge I have gained at Florida State University to contribute to our shared scientific knowledge.

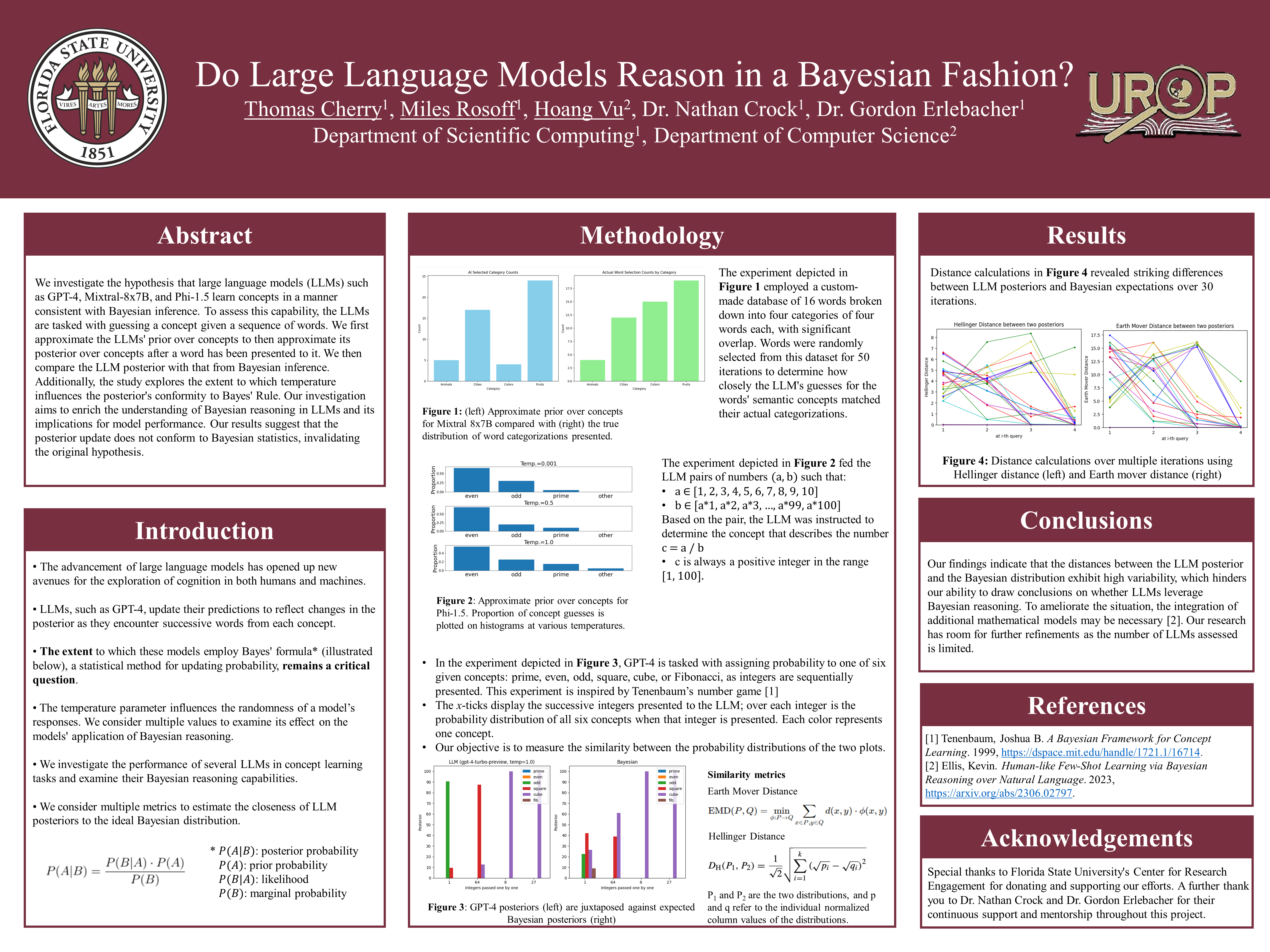

Do Large Language Models Reason In A Bayesian Fashion?

Authors: Hoang Vu, Gordon Erlebacher

Student Major: Computer Science

Mentor: Gordon Erlebacher

Mentor's Department: Scientific Computing

Mentor's College: Florida State University

Co-Presenters: Thomas Cherry, Miles Rosoff

Abstract

We investigate the hypothesis that large language models (LLMs) such as GPT-4, Mixtral-8x7B, and Phi-1.5 learn concepts in a manner consistent with Bayesian inference. To test this, each LLM is asked to guess a concept given a sequence of words. We first approximate each LLM's prior over concepts and then its posterior over concepts after a word has been presented. We then compare the LLM's posterior with the posterior that Bayes' rule predicts from the same prior. We also examine how the sampling temperature affects how closely the posterior conforms to Bayes' rule. Our investigation aims to deepen the understanding of Bayesian reasoning in LLMs and its implications for model performance. Our results suggest that the LLMs' posterior updates do not conform to Bayes' rule, contradicting the original hypothesis.
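The abstract does not specify the concept set, the likelihood model, or the metric used to compare posteriors, so the following is only a minimal sketch under stated assumptions: a toy set of hypothetical concepts (`CONCEPTS`), a size-principle likelihood in which each word is drawn uniformly from a concept's members, and KL divergence as the measure of disagreement. None of these are confirmed to be the study's choices. It illustrates the Bayes' rule update P(c | w) ∝ P(w | c) P(c) that an LLM's posterior would be checked against, and how a temperature-scaled softmax turns raw model scores into a distribution. The helper names and the `llm_scores` values are hypothetical and illustrative, not data from the study.

```python
import math

# Hypothetical toy concepts (not the study's), each a set of member words.
CONCEPTS = {
    "fruit": {"apple", "banana", "cherry", "orange"},
    "color": {"red", "orange", "green", "blue", "yellow"},
    "company": {"apple", "google", "amazon"},
}


def softmax(scores, temperature=1.0):
    """Normalize raw scores into probabilities; a higher temperature flattens the result."""
    scaled = {k: v / temperature for k, v in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - top) for k, v in scaled.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}


def likelihood(word, concept):
    """Assumed size-principle likelihood: the word is drawn uniformly from the concept's members."""
    members = CONCEPTS[concept]
    return 1.0 / len(members) if word in members else 0.0


def bayes_update(prior, word):
    """Bayes' rule: P(c | word) is proportional to P(word | c) * P(c)."""
    unnormalized = {c: likelihood(word, c) * p for c, p in prior.items()}
    evidence = sum(unnormalized.values())  # P(word), the normalizing constant
    if evidence == 0:
        raise ValueError(f"no concept is consistent with {word!r}")
    return {c: u / evidence for c, u in unnormalized.items()}


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q): how much distribution q diverges from the reference distribution p."""
    return sum(pi * math.log(pi / max(q[c], eps)) for c, pi in p.items() if pi > 0)


if __name__ == "__main__":
    # A uniform prior, as if the model scored every concept equally.
    prior = softmax({"fruit": 0.0, "color": 0.0, "company": 0.0})
    bayes_post = bayes_update(prior, "apple")
    print({c: round(p, 3) for c, p in bayes_post.items()})
    # fruit ≈ 0.429, color = 0.0, company ≈ 0.571

    # Made-up scores standing in for an LLM's posterior (not study data),
    # converted to probabilities at three temperatures.
    llm_scores = {"fruit": 2.0, "color": -1.0, "company": 1.0}
    for t in (0.5, 1.0, 2.0):
        llm_post = softmax(llm_scores, temperature=t)
        print(t, round(kl_divergence(bayes_post, llm_post), 4))
```

Under this toy likelihood, "apple" rules out "color" entirely and favors the smaller "company" concept over "fruit", so any mismatch shows up in how the model splits probability between those two. KL divergence is just one convenient choice here; total variation distance or per-concept differences would serve equally well for a sketch.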

Keywords: Bayesian, math, large language model, LLM, AI